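OpenAI later introduced the Responses API, which is intended to replace Chat Completions and can keep track of conversation context automatically. However, it currently works only with OpenAI models and not on other platforms, so it is not considered here for now.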

Overview

```bash
pip install MinimalLLMAgent
```

- Homepage: https://yuelin301.github.io/posts/Minimal-LLM-Agent

- PyPI Page: https://pypi.org/project/MinimalLLMAgent/

- GitHub Page: https://github.com/YueLin301/min_llm_agent

Features:

- simple and unified

- memory management

- a GUI/terminal simulation that allows for web-style interaction

- to bypass the access limits for advanced models on the web version

Models & Pricing: [OpenAI, Grok, DeepSeek, Gemini, Ali]

```python
from min_llm_agent import *

print_all_supported_platforms()
print_all_supported_accessible_models()

# supported_platform_name_list = ["OpenAI", "Grok", "DeepSeek", "Gemini", "Ali"]
print_accessible_models("OpenAI", id_only=True)
```

Examples

See demo.py.

```python
from min_llm_agent import (
    min_llm_agent_class,
    init_client,
    print_all_supported_platforms,
    print_all_supported_accessible_models,
    print_accessible_models,
)

# default parameters: model_name="gpt-4o-mini"
llm_agent = min_llm_agent_class()
```

```python
question = "What is the capital of France?"
response = llm_agent(question)
print(f"Model: {llm_agent.model_name}\nQuestion: {question}\nAnswer: {response}")

llm_agent.print_memory()
```

```text
Model: gpt-4o-mini
Question: What is the capital of France?
Answer: The capital of France is Paris.
===========================================================
Memory:
-----------------------------------------------------------
[0] (user): What is the capital of France?
[1] (assistant): The capital of France is Paris.
===========================================================
```

```python
question = "What is the capital of France?"
response = llm_agent(question, with_memory=False)
llm_agent.print_memory()
```
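The effect of `with_memory=False` can be pictured with a small stand-in class. This is only a sketch of the idea, not the package's implementation: `TinyAgent` and `echo_backend` are hypothetical names, the backend is a stub rather than a real API call, and the sketch assumes that a call with `with_memory=False` neither reads from nor writes to the stored history. The "does not write" part matches the memory printouts in this post; the "does not read" part is a guess.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def echo_backend(messages: List[Message]) -> str:
    """Stub standing in for a real chat-completion call (hypothetical)."""
    return f"seen {len(messages)} message(s)"


class TinyAgent:
    """Hypothetical stand-in, not the min_llm_agent implementation."""

    def __init__(self, backend: Callable[[List[Message]], str] = echo_backend):
        self.backend = backend
        self.memory: List[Message] = []

    def __call__(self, question: str, with_memory: bool = True) -> str:
        user_msg = {"role": "user", "content": question}
        if not with_memory:
            # One-off query: assumed to ignore the history and leave it untouched.
            return self.backend([user_msg])
        # Normal query: record the question, send the whole history, record the answer.
        self.memory.append(user_msg)
        answer = self.backend(self.memory)
        self.memory.append({"role": "assistant", "content": answer})
        return answer


agent = TinyAgent()
first = agent("1+1=?")                      # stored in memory
second = agent("1+2=?", with_memory=False)  # not stored
third = agent("1+3=?")                      # sent together with the first exchange
print(len(agent.memory))
```

Only the first and third exchanges end up in `agent.memory`, which mirrors how the one-off question is absent from the printed memory in the examples.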

```python
messages = [
    {
        "role": "system",
        "content": "You are a helpful assistant.",
    },
    {
        "role": "user",
        "content": "1+1=?",
    },
    {
        "role": "user",
        "content": "1+2=?",
    },
]
response = llm_agent(messages)
print(f"Model: {llm_agent.model_name}\nMessages: {messages}\nAnswer: {response}")
llm_agent.print_memory()
```

```text
Model: gpt-4o-mini
Messages: [{'role': 'system', 'content': 'You are a helpful assistant.'}, {'role': 'user', 'content': '1+1=?'}, {'role': 'user', 'content': '1+2=?'}]
Answer: 1 + 1 = 2.
1 + 2 = 3.
===========================================================
Memory:
-----------------------------------------------------------
[0] (system): You are a helpful assistant.
[1] (user): 1+1=?
[2] (user): 1+2=?
[3] (assistant): 1 + 1 = 2.
1 + 2 = 3.
===========================================================
```
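The agent is shown accepting either a plain string or a list of role/content dictionaries. One way such an interface might normalize its input is sketched below. `to_messages` is a hypothetical helper written for illustration; how the package handles this internally is not documented in this post.

```python
from typing import Dict, List, Union

Message = Dict[str, str]


def to_messages(query: Union[str, List[Message]], role: str = "user") -> List[Message]:
    """Hypothetical helper: turn either input form into a list of chat messages."""
    if isinstance(query, str):
        # A bare string becomes a single message with the given role.
        return [{"role": role, "content": query}]
    for msg in query:
        if "role" not in msg or "content" not in msg:
            raise ValueError(f"message needs 'role' and 'content' keys: {msg!r}")
    # Copy each dict so later edits to the result do not alter the caller's list.
    return [dict(msg) for msg in query]


single = to_messages("1+1=?")
batch = to_messages(
    [
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "1+1=?"},
    ]
)
print(single)
print(batch)
```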

More keywords:

```python
messages = [
    {
        "role": "system",
        "content": "Extract the event information. Output in JSON format, including the event name, date, and participants.",
    },
    {
        "role": "user",
        "content": "Alice and Bob are going to a science fair on Friday.",
    },
]
response = llm_agent(messages, response_format={"type": "json_object"}, temperature=0.5)
```

```text
Model: gpt-4o-mini
Messages: [{'role': 'system', 'content': 'Extract the event information. Output in JSON format, including the event name, date, and participants.'}, {'role': 'user', 'content': 'Alice and Bob are going to a science fair on Friday.'}]
Answer: {
  "event_name": "Science Fair",
  "date": "Friday",
  "participants": [
    "Alice",
    "Bob"
  ]
}
===========================================================
Memory:
-----------------------------------------------------------
[0] (system): Extract the event information. Output in JSON format, including the event name, date, and participants.
[1] (user): Alice and Bob are going to a science fair on Friday.
[2] (assistant): {
  "event_name": "Science Fair",
  "date": "Friday",
  "participants": [
    "Alice",
    "Bob"
  ]
}
===========================================================
```

See the OpenAI API Reference for the full list of keywords.
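A JSON-formatted reply still arrives as text, so it usually needs to be parsed before use. The sketch below assumes the agent returns the reply as a plain string (the printed output suggests this, but it is not stated) and uses a sample string adapted from the output above in place of a live `response`.

```python
import json

# Sample reply adapted from the output above; in real use this would be `response`.
raw_reply = """{
  "event_name": "Science Fair",
  "date": "Friday",
  "participants": ["Alice", "Bob"]
}"""

try:
    event = json.loads(raw_reply)
except json.JSONDecodeError as err:
    # A model can still return malformed JSON, so keep a fallback path.
    event = None
    print(f"Reply was not valid JSON: {err}")

if event is not None:
    print(event["event_name"], event["participants"])
```

Guarding the parse with `try`/`except` is a design choice rather than a requirement: it keeps a single malformed reply from crashing a longer pipeline.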

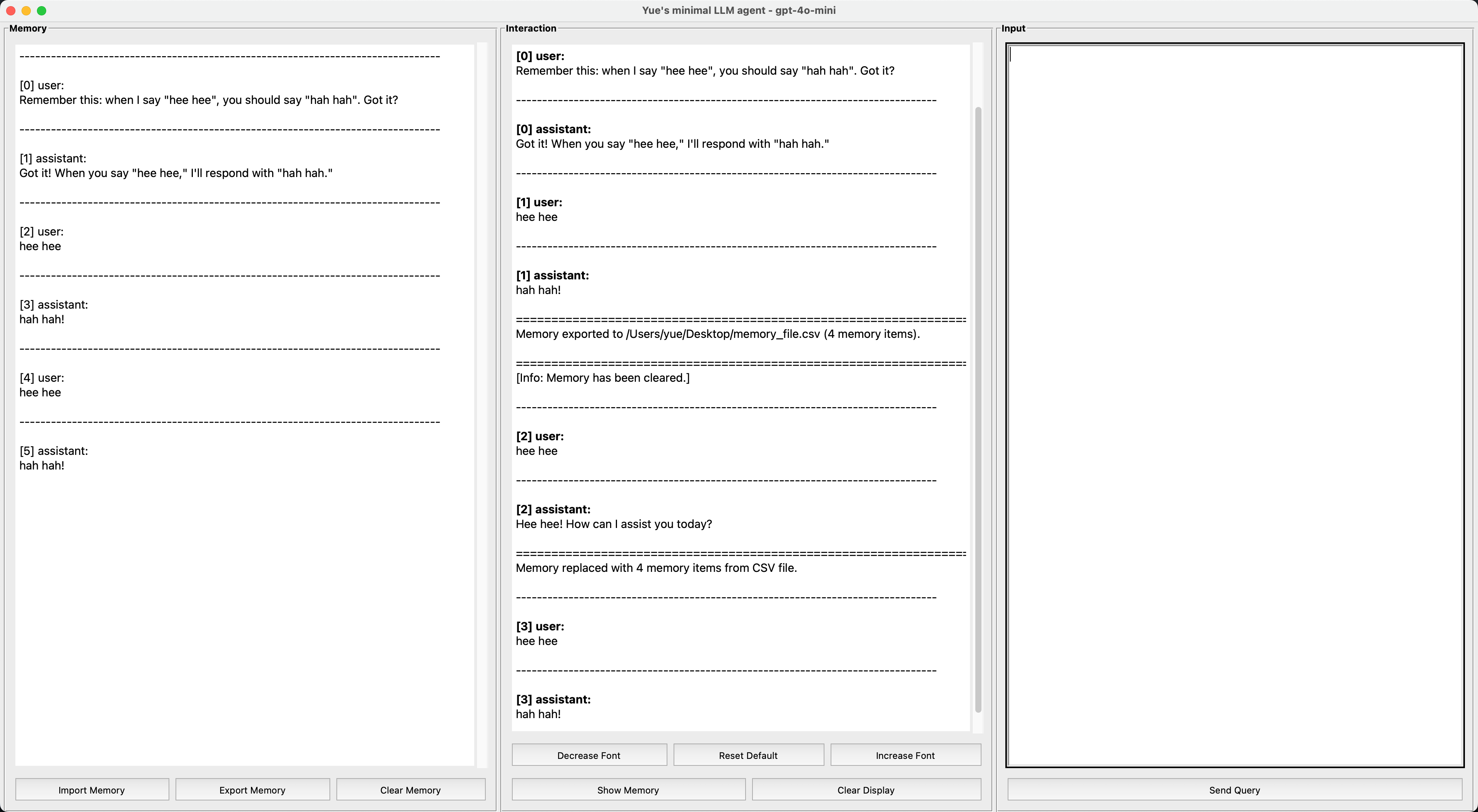

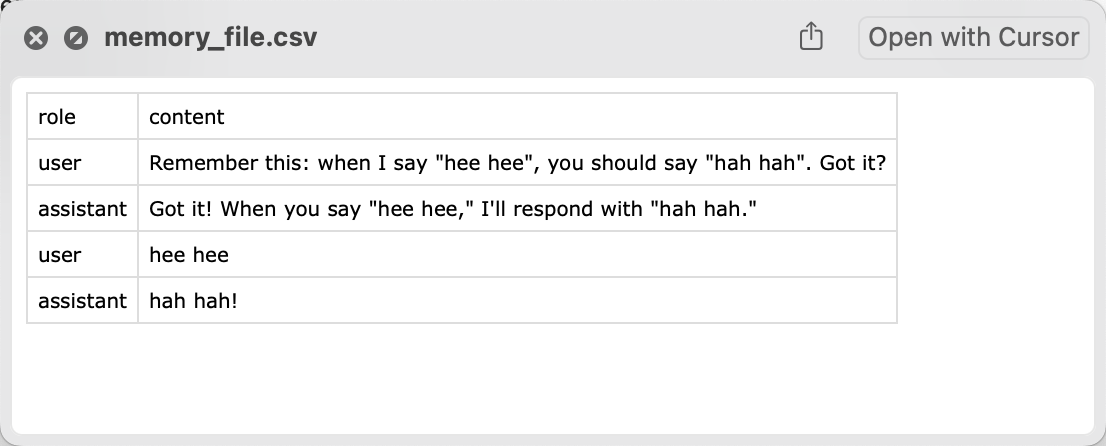

3: Interact

```python
llm_agent.interact(mode="GUI")  # default
```

```python
llm_agent.interact(mode="terminal")
```

```text
==========================
This is Yue's minimal LLM agent, powered by the model "gpt-4o-mini".
- To submit a query: start a new line, type '/', and press Enter.
- Line breaks are allowed and recognized as a part of the query.
- Query 'q' or 'Q' to exit.
- Query 'm' or 'M' to print the memory.
See more details on: https://github.com/YueLin301/min_llm_agent

>>>>>>>>>>>>>>>>>>>>>>>>>>
[0] Question:
> 1+1=
/
<<<<<<<<<<<<<<<<<<<<<<<<<<
[0] Answer by the model gpt-4o-mini:
1 + 1 = 2.

>>>>>>>>>>>>>>>>>>>>>>>>>>
[1] Question:
> how are you
/
<<<<<<<<<<<<<<<<<<<<<<<<<<
[1] Answer by the model gpt-4o-mini:
I'm just a computer program, so I don't have feelings, but I'm here and ready to help you! How can I assist you today?

>>>>>>>>>>>>>>>>>>>>>>>>>>
[2] Question:
> m
/
==========================
Memory:
--------------------------
[0] (user): 1+1=
[1] (assistant): 1 + 1 = 2.
[2] (user): how are you
[3] (assistant): I'm just a computer program, so I don't have feelings, but I'm here and ready to help you! How can I assist you today?
==========================

>>>>>>>>>>>>>>>>>>>>>>>>>>
[2] Question:
> q
/
```
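The terminal session above follows a simple input rule: lines accumulate into one query until a line containing only `/` is entered, and the query `q` ends the session. A minimal sketch of that rule is given here. `read_queries` is a hypothetical function, not part of the package, and it works on any iterable of lines so it can be tried without a live terminal. Handling of the `m` command is left to the caller.

```python
from typing import Iterable, Iterator, List


def read_queries(lines: Iterable[str]) -> Iterator[str]:
    """Yield multi-line queries; a line containing only '/' submits the query."""
    buffer: List[str] = []
    for line in lines:
        if line.strip() == "/":
            query = "\n".join(buffer)
            buffer = []
            if query.strip().lower() == "q":
                return  # 'q' or 'Q' ends the session
            yield query
        else:
            # Any other line, including an empty one, is part of the query.
            buffer.append(line)


typed = ["1+1=", "/", "how are", "you", "/", "q", "/", "never reached", "/"]
queries = list(read_queries(typed))
print(queries)
```

To try it interactively, the same function could be fed from standard input, for example `read_queries(line.rstrip("\n") for line in sys.stdin)` after `import sys`.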

4: Memory Management

```python
from min_llm_agent import min_llm_agent_class
from LyPythonToolbox import lyprint_separator
from pprint import pprint

if __name__ == "__main__":
    llm_agent = min_llm_agent_class(platform_name="OpenAI", model_name="gpt-4o-mini")

    response = llm_agent("1+1=?")
    response = llm_agent("1+2=?", with_memory=False)
    llm_agent.print_memory()
    lyprint_separator("|")

    llm_agent.reset_memory()
    print("Reset memory...")
    response = llm_agent("1+3=?")
    llm_agent.print_memory()
    lyprint_separator("|")

    print("Set memory...")
    llm_agent.set_memory([{"role": "system", "content": "You are a self-interested and rational player."}])
    llm_agent.print_memory()
    lyprint_separator("|")

    response = llm_agent("You are playing a coordination game. State your strategy in only a sentence.", role="user")
    print("Get memory and pprint...")
    memory = llm_agent.get_memory()
    pprint(memory)
    lyprint_separator("|")

    print("Append memory...")
    llm_agent.append_memory({"role": "user", "content": "1+4=?"})
    llm_agent.print_memory()
```

```text
================================================
Memory:
------------------------------------------------
[0] (user): 1+1=?
[1] (assistant): 1 + 1 = 2.
================================================
||||||||||||||||||||||||||||||||||||||||||||||||
Reset memory...
================================================
Memory:
------------------------------------------------
[0] (user): 1+3=?
[1] (assistant): 1 + 3 = 4.
================================================
||||||||||||||||||||||||||||||||||||||||||||||||
Set memory...
================================================
Memory:
------------------------------------------------
[0] (system): You are a self-interested and rational player.
================================================
||||||||||||||||||||||||||||||||||||||||||||||||
Get memory and pprint...
[{'content': 'You are a self-interested and rational player.',
  'role': 'system'},
 {'content': 'You are playing a coordination game. State your strategy in only '
             'a sentence.',
  'role': 'user'},
 {'content': 'I will choose the strategy that aligns with the most commonly '
             'played option by other players to ensure mutual coordination and '
             'benefit.',
  'role': 'assistant'}]
||||||||||||||||||||||||||||||||||||||||||||||||
Append memory...
================================================
Memory:
------------------------------------------------
[0] (system): You are a self-interested and rational player.
[1] (user): You are playing a coordination game. State your strategy in only a sentence.
[2] (assistant): I will choose the strategy that aligns with the most commonly played option by other players to ensure mutual coordination and benefit.
[3] (user): 1+4=?
================================================
```
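As the `pprint` output shows, the memory is an ordinary list of dictionaries with `role` and `content` keys. The sketch below reproduces the indexed printout format on such a list. `format_memory` is a hypothetical helper and the separator width is arbitrary; the list operations are only analogies to `reset_memory`, `set_memory`, and `append_memory`, not their actual implementations.

```python
from typing import Dict, List

Message = Dict[str, str]


def format_memory(memory: List[Message], width: int = 48) -> str:
    """Render messages as '[index] (role): content' lines between separators."""
    lines = ["=" * width, "Memory:", "-" * width]
    for index, msg in enumerate(memory):
        lines.append(f"[{index}] ({msg['role']}): {msg['content']}")
    lines.append("=" * width)
    return "\n".join(lines)


memory: List[Message] = []  # analogous to reset_memory()
memory = [{"role": "system", "content": "You are a self-interested and rational player."}]  # like set_memory(...)
memory.append({"role": "user", "content": "1+4=?"})  # like append_memory(...)

text = format_memory(memory)
print(text)
```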

How to Use

Installation

```bash
pip install MinimalLLMAgent
```

API Key

For security reasons, this project does not maintain any API key files. You need to configure the API key yourself in the environment variables. Check the following guidelines to see how it is done:

An Example Setup for macOS Users:

- Append the following API configurations to the end of the ~/.zshrc file.

```bash
export OPENAI_API_KEY="sk-xxx"
export OPENAI_BASE_URL="https://api.openai.com/v1"

export XAI_API_KEY="xai-xxx"
export XAI_BASE_URL="https://api.x.ai/v1"

export DEEPSEEK_API_KEY="sk-xxx"
export DEEPSEEK_BASE_URL="https://api.deepseek.com"

export GEMINI_API_KEY=""
export GEMINI_BASE_URL="https://generativelanguage.googleapis.com/v1beta/openai/"

export DASHSCOPE_API_KEY="sk-xxx"
export DASHSCOPE_BASE_URL="https://dashscope.aliyuncs.com/compatible-mode/v1"
```

- Run source ~/.zshrc to update.
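Before creating an agent, it can help to confirm that the variables are actually visible to Python. The check below is a sketch under stated assumptions: the `ENV_PREFIX` mapping from platform name to variable prefix is inferred from the export lines above, and `read_credentials` is a hypothetical helper, not a function of the package.

```python
import os
from typing import Dict, Mapping, Optional

# Assumed mapping, inferred from the export lines above (not taken from the package).
ENV_PREFIX = {
    "OpenAI": "OPENAI",
    "Grok": "XAI",
    "DeepSeek": "DEEPSEEK",
    "Gemini": "GEMINI",
    "Ali": "DASHSCOPE",
}


def read_credentials(platform: str, env: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Hypothetical helper: fetch the API key and base URL for one platform."""
    env = os.environ if env is None else env
    prefix = ENV_PREFIX[platform]
    api_key = env.get(f"{prefix}_API_KEY", "")
    base_url = env.get(f"{prefix}_BASE_URL", "")
    if not api_key:
        # Covers both a missing variable and an empty placeholder value.
        raise RuntimeError(f"{prefix}_API_KEY is not set; add it to ~/.zshrc and re-source the file")
    return {"api_key": api_key, "base_url": base_url}


# A fake environment keeps the demo independent of the real shell configuration.
fake_env = {"OPENAI_API_KEY": "sk-xxx", "OPENAI_BASE_URL": "https://api.openai.com/v1"}
creds = read_credentials("OpenAI", env=fake_env)
print(creds["base_url"])
```

Calling `read_credentials("OpenAI")` without the `env` argument reads from the real environment, which is a quick way to verify that the shell configuration was picked up.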

Resources